MIT.nano Immersion Lab and NCSOFT seek to speed innovations in hardware and software

The MIT.nano Immersion Lab Gaming Program seeks to chart the future of how people interact with the world and each other via hardware and software innovations in gaming technology. A recent virtual workshop sponsored by MIT.nano and gaming company NCSOFT highlighted MIT research that uses these technologies to blend the physical and digital worlds.

The workshop, held on April 30, was also intended to brief MIT principal investigators on the gaming program’s call for proposals for its 2020 seed grants. Leaders from the MIT.nano Immersion Lab and NCSOFT spoke to approximately 50 MIT faculty, researchers, and students about the lab’s capabilities and the gaming program. Three presentations from MIT researchers followed, covering human movement, augmented reality in opera, and teaching quantum computing with virtual reality.

NCSOFT, a video game development company, joined with MIT.nano to launch the MIT.nano Immersion Lab Gaming Program in 2019. As part of the collaboration, NCSOFT provided funding to acquire hardware and software tools to outfit the MIT.nano Immersion Lab as a research and collaboration space for investigations in artificial intelligence, virtual and augmented reality (VR/AR), artistic projects, and other explorations at the intersection of hard tech and human beings.

Joonsoo Lee, technical director of the NCSOFT AI Center, told the group that the seed grants are meant to foster new technologies and paradigms for the future of gaming and other disciplines. “We are good at software and games, MIT.nano has strong power on the hardware side, and the MIT community has many experiences in innovation,” Lee said. “I believe if we combine these, we can do something very new and very innovative for the future.”

MIT.nano Associate Director Brian Anthony and MIT.nano Immersion Lab Director Megan Roberts gave an update on the tools and resources at the Immersion Lab. Capabilities of the facility currently include tools for creating, testing, and experiencing immersive environments. These tools include photogrammetric scanners for creating digital assets and environments from physical objects and spaces, multi-user walk-around virtual reality, a motion capture studio, and the computational resources to generate multidimensional content. The Immersion Lab is also collaborating with the MIT Clinical Research Center (CRC) to bring together the CRC’s clinical measurements and monitoring with the Immersion Lab’s tools and simulation capabilities.

The workshop also highlighted the breadth of hardware and software research related to immersive experiences across MIT departments and laboratories. Three MIT principal investigators presented current projects as part of the workshop.

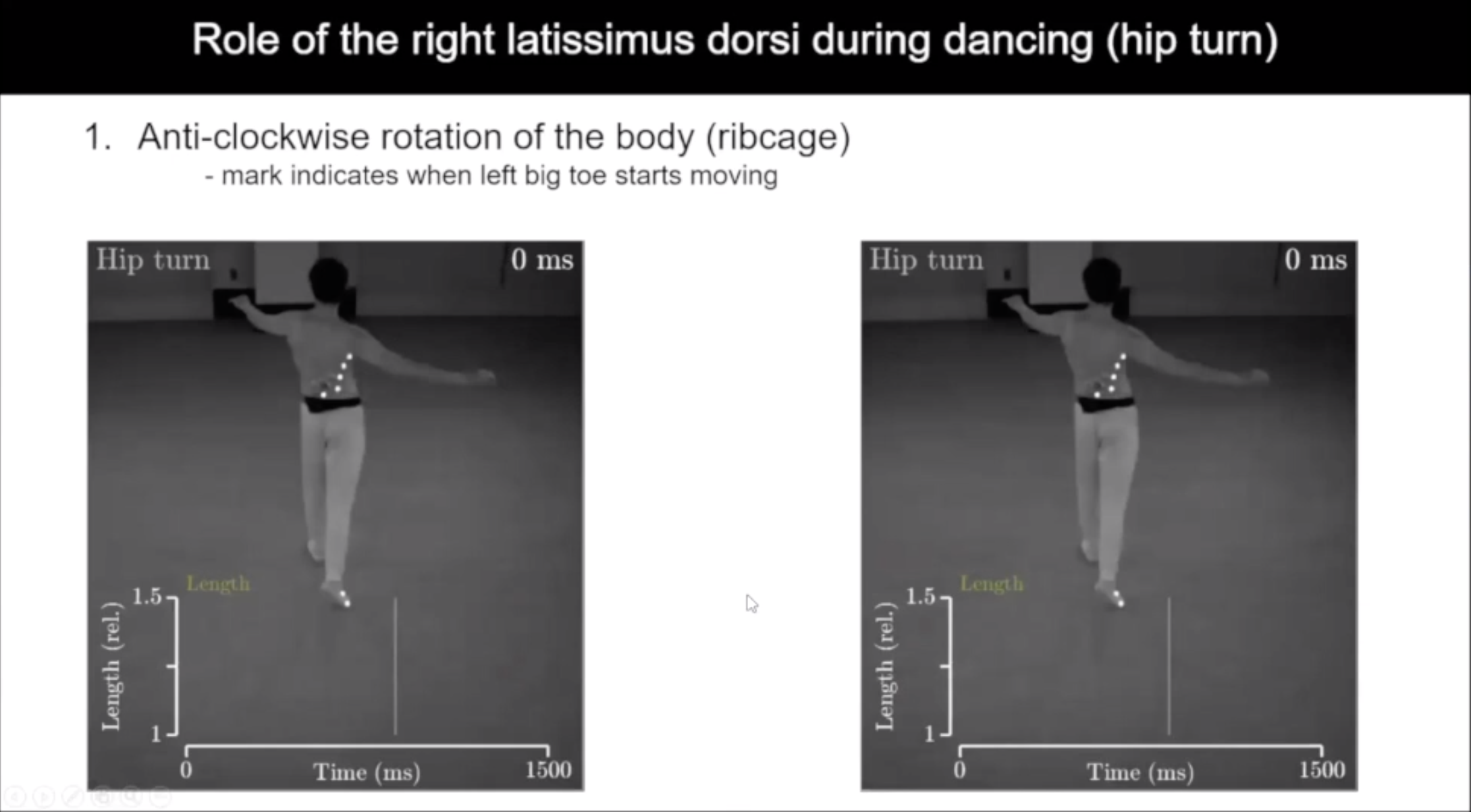

Dance-inspired investigation of human movement: Professor Luca Daniel, a 2019 NCSOFT seed grant awardee, is studying how to replicate human movements in virtual characters. Praneeth Namburi, a postdoctoral associate in Daniel’s computational prototyping research group, demonstrated the group’s research on how dancers approach movement, which the researchers hypothesize relies on stretch-based joint stabilization. Namburi has been using motion tracking and electromyography in the MIT.nano Immersion Lab to visualize changes in muscles and joints during physical movements.

Augmenting opera: Jay Scheib, a professor in Music and Theater Arts, focuses on making opera and theater more immersive by bringing live cinematic technology and techniques into theatrical forms. In his productions, live production and simultaneous broadcast give the audience two layers of reality: the making of the performance and the performance itself. Scheib’s current challenge is to create a production using AR techniques that draws on existing extended reality (XR) technology, such as live video and special effects processed in real time and projected back onto a surface or into headsets.

The Qubit Arcade: Chris Boebel, the media development director for MIT Open Learning, and Will Oliver, an associate professor in the Department of Electrical Engineering and Computer Science, are developing an online course on quantum computing. While building the course, Boebel and Oliver found themselves relying heavily on two-dimensional visualizations of quantum computing fundamentals.

To improve the learning experience, the two teamed up with virtual reality developer Luis Zanforlin to create Qubit Arcade, a VR model of quantum computing. The arcade gives students a hands-on sensory experience in which they can manipulate qubits in three-dimensional space and even view a qubit from the inside. Boebel, Oliver, and Zanforlin are also working on a spatial circuit that allows multiple users to compose and run an algorithm in the same virtual space. The team hopes to use the MIT.nano Immersion Lab to bring students together to visualize and experiment with quantum computing in the Qubit Arcade.

The workshop concluded with an explanation of this year’s MIT.nano Immersion Lab Gaming Program call for software and hardware research proposals related to sensors, 3D/4D interaction and analysis, AR/VR, and gaming. Applications are due May 28.